Below, you can view an exhibition game played between the best evolved Pacman agents and random Ghost agents.

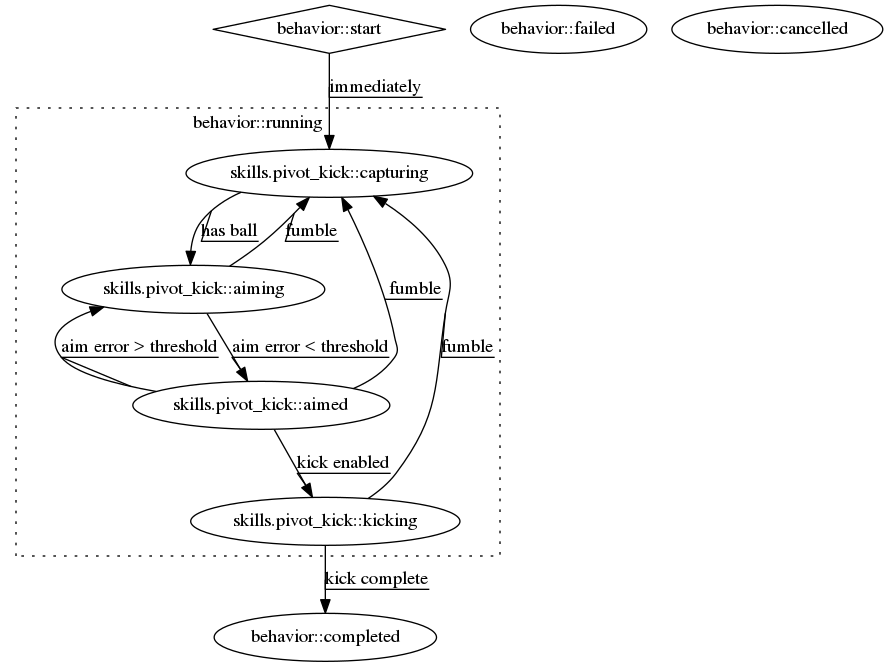

This self-playing Pacman agent is built with evolutionary computation: it uses Koza-style, tree-based genetic programming to decide the best next action to take.
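One common way a Koza-style tree picks a move, assumed here rather than taken from this project, is to treat the tree as an arithmetic expression over sensor terminals, score the state each legal action would lead to, and take the highest-scoring action. In the minimal sketch below, the nested-tuple encoding, the `+ - *` function set, and the sensor names `ghost_dist` and `pill_dist` are all hypothetical; the project's actual encoding may differ.

```python
import operator

# Hypothetical function set: binary arithmetic nodes (an assumption, not the project's set).
FUNCS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(tree, features):
    """Recursively evaluate a tree of nested (op, left, right) tuples."""
    if isinstance(tree, tuple):
        op, left, right = tree
        return FUNCS[op](evaluate(left, features), evaluate(right, features))
    if isinstance(tree, str):
        return features[tree]  # terminal: a named (hypothetical) sensor reading
    return tree  # terminal: a numeric constant


def choose_action(tree, successor_features):
    """Return the legal action whose resulting state the tree scores highest.

    successor_features maps each legal action (e.g. "North") to a dict of
    hypothetical sensor values measured in the state that action leads to.
    """
    return max(successor_features,
               key=lambda action: evaluate(tree, successor_features[action]))


if __name__ == "__main__":
    # This tree prefers being far from ghosts and close to pills.
    tree = ("-", "ghost_dist", "pill_dist")
    moves = {
        "North": {"ghost_dist": 5, "pill_dist": 1},  # scores 5 - 1 = 4
        "South": {"ghost_dist": 2, "pill_dist": 3},  # scores 2 - 3 = -1
    }
    print(choose_action(tree, moves))  # prints "North"
```

Because the tree only scores states, the same individual works for any set of legal moves the game offers on a given turn.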

In this project, the objective is to evolve the best possible Pacman agent, that is, the agent with the highest fitness. Fitness is based on the game score: a higher score yields a higher fitness. First, I generate an initial population of random Pacman agents and then evolve them over a number of generations. At the final generation, the agent with the highest fitness is selected as the best agent.
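The overall cycle can be sketched as a generic generational loop. This is a minimal sketch, not the project's code: `evolve`, `truncation`, and their parameters are hypothetical names, `fitness` stands in for playing a game and returning its score, and survivors are assumed to be chosen from parents plus offspring. The toy demo evolves plain numbers instead of Pacman trees.

```python
import random


def evolve(init_population, fitness, make_offspring, select_survivors, generations):
    """Generic generational loop: evaluate, vary, select, repeat.

    fitness(ind) stands in for playing a game and returning its score.
    Survivors are chosen from parents + offspring (an assumed (mu + lambda)
    scheme), and the fittest individual of the final generation is returned.
    """
    population = init_population()
    fits = [fitness(ind) for ind in population]
    for _ in range(generations):
        offspring = make_offspring(population, fits)
        offspring_fits = [fitness(ind) for ind in offspring]
        population, fits = select_survivors(population + offspring, fits + offspring_fits)
    best = max(range(len(population)), key=lambda i: fits[i])
    return population[best], fits[best]


def truncation(pool, fits, mu):
    """Keep the mu fittest individuals (a simple stand-in selection scheme)."""
    order = sorted(range(len(pool)), key=lambda i: fits[i], reverse=True)[:mu]
    return [pool[i] for i in order], [fits[i] for i in order]


if __name__ == "__main__":
    # Toy stand-in: individuals are numbers and the "score" peaks at x = 3.
    rng = random.Random(0)
    best, best_fit = evolve(
        init_population=lambda: [rng.uniform(-10, 10) for _ in range(20)],
        fitness=lambda x: -(x - 3.0) ** 2,
        make_offspring=lambda pop, fits: [x + rng.gauss(0, 1) for x in pop],
        select_survivors=lambda pool, fits: truncation(pool, fits, mu=20),
        generations=50,
    )
    print(best, best_fit)
```

Passing the operators in as callables keeps the loop independent of the representation, so the same skeleton could drive tree individuals with tournament selection and a penalized fitness.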

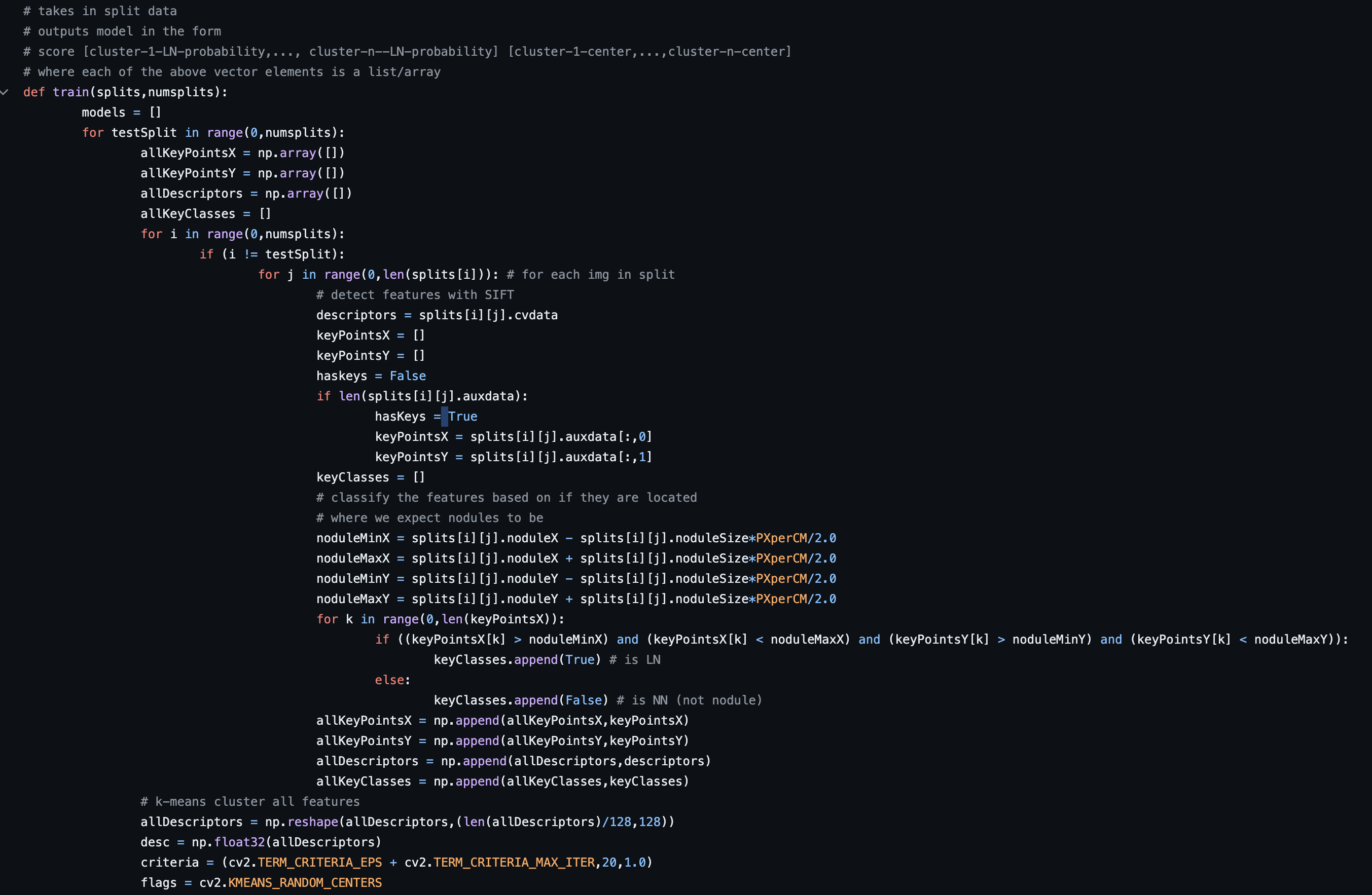

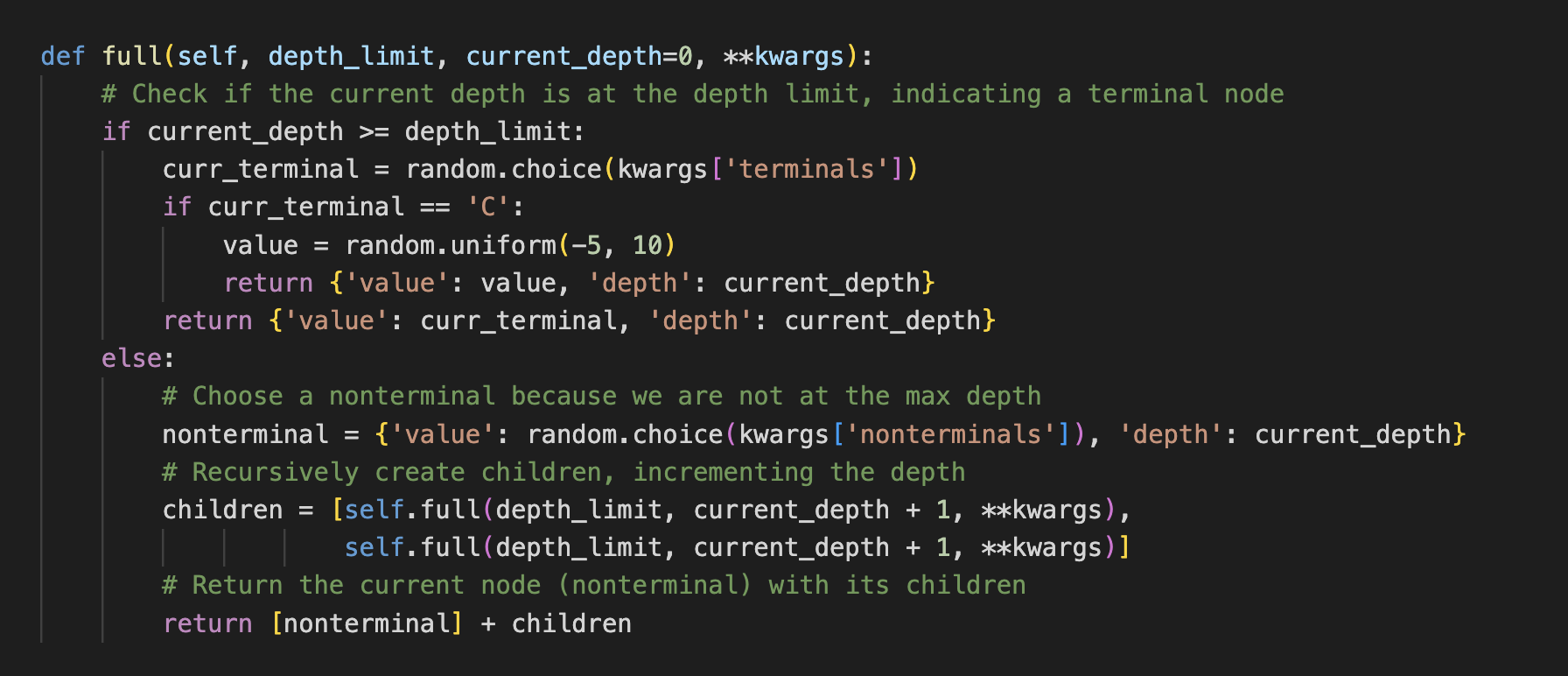

The initial population is generated randomly using ramped half-and-half initialization, in which half of the trees are built with the "full" method and half with the "grow" method across a range of depths. Each individual is a tree describing the agent's decisions: each node represents an action Pacman can take in the game, and each leaf represents a terminal state drawn from the states Pacman can be in. Every individual is then assigned an initial fitness by the fitness function, that is, by how well it plays the game.
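Ramped half-and-half can be sketched in a few lines. In this minimal sketch the nested-tuple tree encoding and the primitive sets `FUNCS` and `TERMINALS` (with sensor names such as `fruit_dist`) are hypothetical stand-ins, not the project's actual genes. Depths cycle from `min_depth` to `max_depth`, and the method alternates between "full" and "grow" on each pass over the depths, so every depth gets trees from both methods.

```python
import random

# Hypothetical primitive sets; the project's real functions and terminals may differ.
FUNCS = ["+", "-", "*"]
TERMINALS = ["ghost_dist", "pill_dist", "fruit_dist"]


def full(depth, rng):
    """'Full' method: every branch reaches exactly `depth`."""
    if depth == 0:
        return rng.choice(TERMINALS)
    return (rng.choice(FUNCS), full(depth - 1, rng), full(depth - 1, rng))


def grow(depth, rng):
    """'Grow' method: branches may end early in a terminal, up to `depth`."""
    p_terminal = len(TERMINALS) / (len(TERMINALS) + len(FUNCS))
    if depth == 0 or rng.random() < p_terminal:
        return rng.choice(TERMINALS)
    return (rng.choice(FUNCS), grow(depth - 1, rng), grow(depth - 1, rng))


def tree_depth(tree):
    """Depth of a tree; a lone terminal has depth 0."""
    if isinstance(tree, tuple):
        return 1 + max(tree_depth(tree[1]), tree_depth(tree[2]))
    return 0


def ramped_half_and_half(pop_size, max_depth, rng, min_depth=1):
    """Cycle through depths min_depth..max_depth, using 'full' for one pass
    over the depths and 'grow' for the next, so each depth gets both methods."""
    depths = list(range(min_depth, max_depth + 1))
    population = []
    for i in range(pop_size):
        depth = depths[i % len(depths)]
        method = full if (i // len(depths)) % 2 == 0 else grow
        population.append(method(depth, rng))
    return population


if __name__ == "__main__":
    for tree in ramped_half_and_half(pop_size=8, max_depth=3, rng=random.Random(42)):
        print(tree_depth(tree), tree)
```

Switching methods per pass rather than per individual avoids a subtle bias: with an even number of depths, alternating on every individual would pair each depth with only one method.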

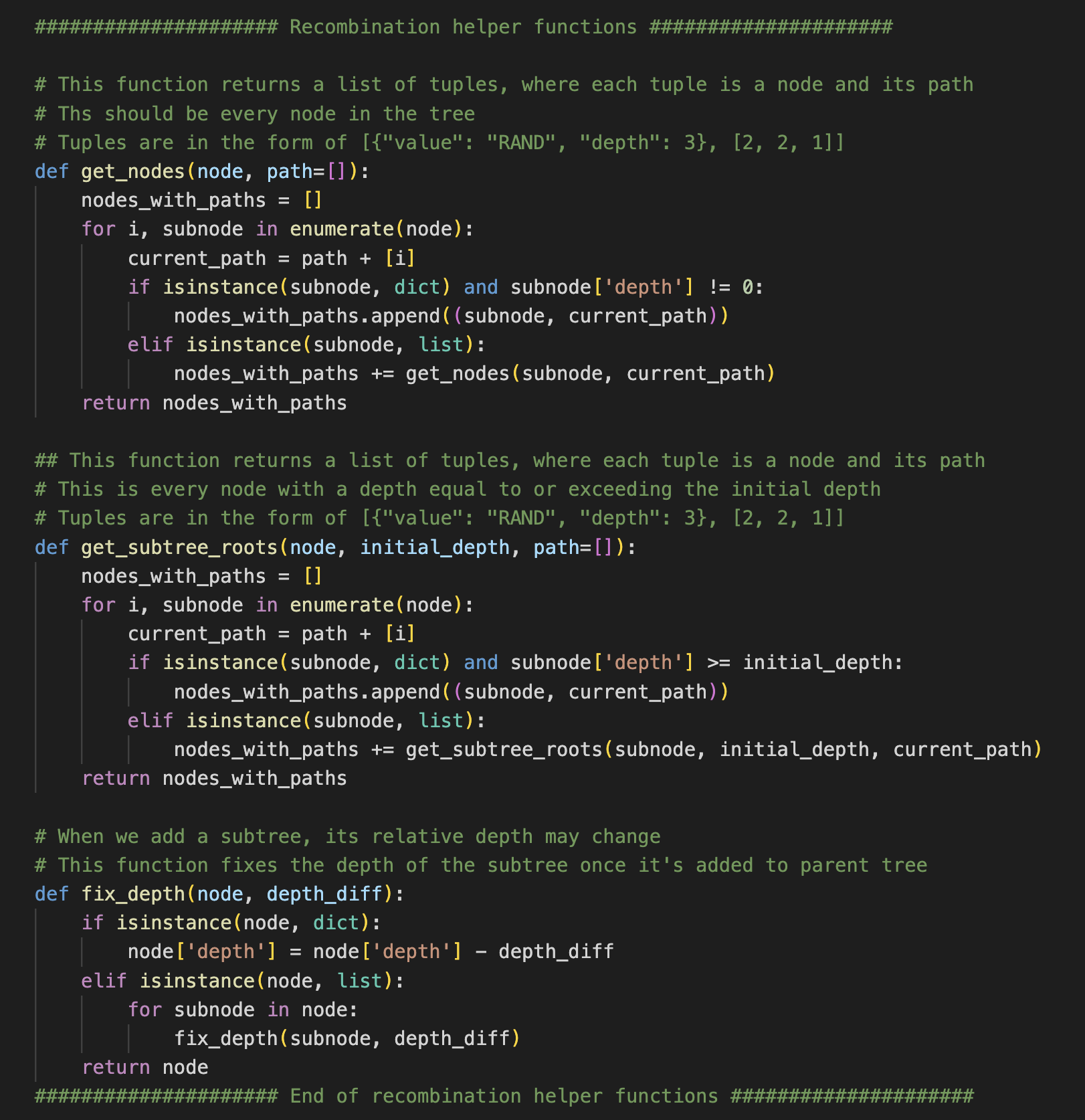

New offspring are then produced by recombining and mutating these genes through subtree crossover, subtree mutation, and point mutation. A fitness-based selection method, k-tournament selection without replacement, chooses the survivors, which in turn become the parents of the next generation. A penalty function based on tree depth (a form of parsimony pressure) encourages smaller trees and thus more efficient agents.
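The operators named above can be sketched on a nested-tuple tree encoding. This is a minimal sketch under stated assumptions: the encoding, the primitive sets, the 0.5 stopping probability in `random_tree`, the linear penalty coefficient `coeff`, and all function names are hypothetical. Crossover here returns a single child, and "without replacement" is read as each tournament winner leaving the pool, so no individual survives twice.

```python
import random

# Hypothetical primitive sets, not taken from the project.
FUNCS = ["+", "-", "*"]
TERMINALS = ["ghost_dist", "pill_dist", "fruit_dist"]


def random_tree(max_d, rng):
    """Grow-style random tree, used to build replacement subtrees."""
    if max_d == 0 or rng.random() < 0.5:
        return rng.choice(TERMINALS)
    return (rng.choice(FUNCS), random_tree(max_d - 1, rng), random_tree(max_d - 1, rng))


def node_paths(tree, path=()):
    """Yield the child-index path to every node, root first."""
    yield path
    if isinstance(tree, tuple):
        for i in (1, 2):
            yield from node_paths(tree[i], path + (i,))


def get_node(tree, path):
    for i in path:
        tree = tree[i]
    return tree


def replace_node(tree, path, new):
    """Return a copy of `tree` with the node at `path` replaced by `new`."""
    if not path:
        return new
    parts = list(tree)
    parts[path[0]] = replace_node(tree[path[0]], path[1:], new)
    return tuple(parts)


def tree_depth(tree):
    return 1 + max(tree_depth(tree[1]), tree_depth(tree[2])) if isinstance(tree, tuple) else 0


def subtree_crossover(a, b, rng):
    """Graft a random subtree of parent b onto a random point of parent a."""
    donor = get_node(b, rng.choice(list(node_paths(b))))
    return replace_node(a, rng.choice(list(node_paths(a))), donor)


def subtree_mutation(tree, rng, max_depth=3):
    """Replace a random subtree with a freshly generated one."""
    return replace_node(tree, rng.choice(list(node_paths(tree))), random_tree(max_depth, rng))


def point_mutation(tree, rng):
    """Swap a single node for a random node of the same arity."""
    path = rng.choice(list(node_paths(tree)))
    node = get_node(tree, path)
    if isinstance(node, tuple):
        return replace_node(tree, path, (rng.choice(FUNCS), node[1], node[2]))
    return replace_node(tree, path, rng.choice(TERMINALS))


def penalized_fitness(score, tree, coeff=1.0):
    """Parsimony pressure: subtract a (hypothetical) linear depth penalty."""
    return score - coeff * tree_depth(tree)


def k_tournament_without_replacement(pop, fits, n, k, rng):
    """Pick n survivors; each tournament winner leaves the pool, so no duplicates."""
    if n > len(pop):
        raise ValueError("cannot select more survivors than individuals")
    pool = list(range(len(pop)))
    survivors = []
    for _ in range(n):
        contestants = rng.sample(pool, min(k, len(pool)))
        winner = max(contestants, key=lambda i: fits[i])
        survivors.append(pop[winner])
        pool.remove(winner)
    return survivors
```

Building new tuples instead of editing nodes in place means a parent is never altered by producing a child from it.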

This process is repeated for a number of generations, and the agent with the highest fitness at the end is selected as the best agent.

It should be noted that these methods can evolve multiple agents at a time and can also be applied to the Ghost agents. In fact, we also investigated evolving Ghost and Pacman agents against each other, using competitive coevolution to evolve the best Ghost and Pacman agents.
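In competitive coevolution, each population's fitness comes from games against the other. The minimal sketch below assumes an all-vs-all pairing and a zero-sum reward (Ghosts are scored by the negated Pacman score they concede); `coevolution_fitness` and the `play` callable are hypothetical, and the project may instead sample a subset of opponents or score Ghosts differently.

```python
from statistics import mean


def coevolution_fitness(pacmen, ghosts, play):
    """Score two populations against each other (assumed all-vs-all pairing).

    play(pacman, ghost) is a hypothetical callable returning the game score.
    Pacman fitness is its mean score; Ghost fitness is the negated mean score
    it conceded, so each side improves only by beating the other.
    """
    scores = [[play(p, g) for g in ghosts] for p in pacmen]
    pac_fit = [mean(row) for row in scores]
    ghost_fit = [-mean(row[j] for row in scores) for j in range(len(ghosts))]
    return pac_fit, ghost_fit


if __name__ == "__main__":
    # Toy game: a stronger Pacman scores more, a stronger Ghost concedes less.
    pac_fit, ghost_fit = coevolution_fitness([1, 2], [1, 3], lambda p, g: 10 * p - g)
    print(pac_fit, ghost_fit)
```

All-vs-all pairing costs one game per Pacman-Ghost pair each generation, which is why sampling a few opponents per individual is a common cheaper alternative.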